W04 Learning Activity: Software Testing

Overview

Software testing is the process of ensuring that software works correctly and consistently. It verifies that the software has the required functionality, and is also concerned with non-functional requirements such as security and performance.

Testing is usually listed as coming after development when describing the phases of the Software Development Lifecycle (SDLC), but there has been a strong movement to Shift Left, bringing testing principles into the earlier stages of requirements, design, and certainly development.

Key Points to Remember:

- Testing is all about managing risk.

- Everyone on the team should think about testing and quality assurance throughout the entire SDLC.

Preparation Material

The noted computer scientist Edsger Dijkstra famously observed that testing can be used to show the presence of bugs, but never to show their absence. If it is impossible to prove that there are no more bugs, what then is the purpose of testing?

One way to answer this question is to say that testing is all about managing risk. By verifying that the product works correctly in a wide range of scenarios and conditions, you discover risks or problems that could occur. Then, by taking steps to address those issues, you reduce the risk of problems occurring in the future.

Software testing is all about managing risk.

Because testing is all about managing risk rather than proving the absence of all bugs, it might leave you with a number of questions that do not have clear yes or no answers. With this in mind, the sections of this learning activity have deliberately been labeled as questions.

Why should you test?

In managing risk, the following are key objectives of testing:

- Verify and Validate: Ensure the software functions as expected and meets the requirements specified by the stakeholders.

- Detect Defects: Identify bugs, errors, or other issues within the software to ensure it functions correctly under various conditions.

- Improve Quality: Enhance the overall quality of the software by finding and fixing issues before the product is released to users.

- Ensure Security: Test for vulnerabilities that could be exploited by malicious users.

- Ensure Performance: Verify that the software performs well under expected workloads and stress conditions.

How much testing is enough?

One of the most difficult questions in testing is: when has a product been tested sufficiently? Or, said another way, when is it acceptable to limit the amount of testing you perform on a system?

One reason this question is difficult is that the answer depends on the context and the risks associated with a particular kind of failure. For example, you would likely want more rigorous testing for the software in an airplane, a medical device, or a financial application than for a non-critical app or an internal company tool. Even within a single product, some components may be more critical than others.

Recognizing testing as managing risk means that you must identify potential types of failures and determine your tolerance for problems in each area.

It's a trap!

It can be very tempting to view something as non-critical and use that as an excuse to limit quality, reduce testing, or skip it altogether. You will likely regret this decision later.

The users of any system expect it to work correctly and consistently, and if it does not, the consequences for them can be serious, even when no lives or large sums of money are at stake. Consider, for example, how it would affect you if the homework submission tool occasionally lost your submission or recorded an incorrect date, or if the scales at a supermarket were occasionally wrong or not working. These may seem like non-critical systems, but they are incredibly important to their users.

As another example, consider the infamous British Post Office scandal, in which bugs in the Horizon accounting software led to subpostmasters being wrongly held responsible for shortfalls, eventually resulting in debts, bankruptcies, criminal convictions, imprisonments, and family breakdowns.

Any software that is important enough to write is important enough to ensure that it works correctly.

What are the various types of testing?

Testing can be categorized in various ways. One way to categorize testing is to separate it into two broad categories: functional and non-functional testing.

Functional Testing

Functional testing verifies that the software functions according to the requirements and specifications. It checks whether the system behaves as expected and whether the user can perform their necessary tasks using the software. The primary focus is on ensuring that the application does what it is supposed to do.

The V-Model

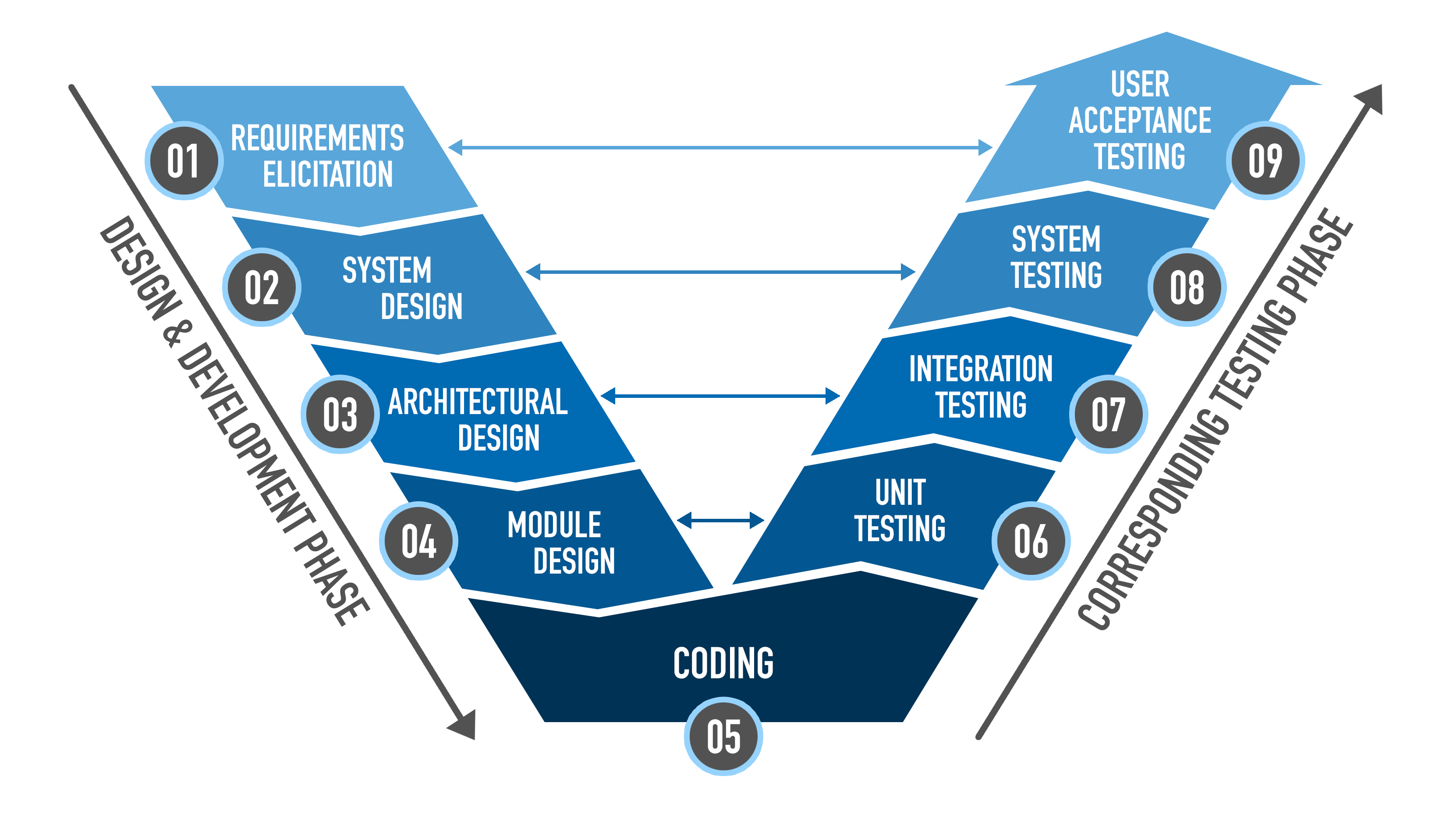

The V-Model in software development emphasizes the importance of testing at each stage of the design and development process. It specifies a direct testing phase that corresponds to each development phase, forming a V shape when depicted graphically.

The following figure shows the typical phases:

The Testing Pyramid

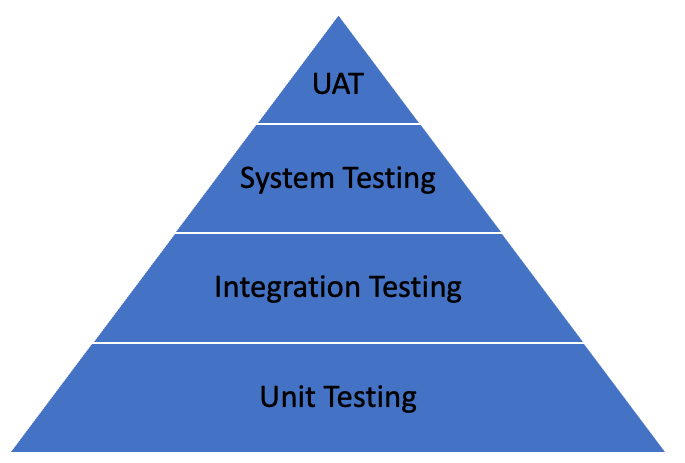

Another way to visually depict the testing phases from the V-Model is to view them as a pyramid:

The testing pyramid illustrates that tests should be layered, with a larger number of simpler, lower-level tests at the bottom and fewer, more complex, higher-level tests at the top. This approach helps ensure a comprehensive and efficient testing process.

Unit Tests

The foundation of the pyramid is unit testing. Unit tests are automated tests focused on individual functions or components. They ensure that a function or component will produce the proper output based on a given set of parameters.

There are typically a very large number of unit tests, because there are many functions in a system and each function is tested in multiple ways. Because they test small pieces of code, unit tests run very quickly and are typically inexpensive to write and maintain. Unit tests can also be run in a simulator or emulator, especially when the functionality depends on hardware or other third-party systems.

There are established frameworks that can efficiently run all unit tests in a project and report the results. These frameworks include tools like JUnit (Java), NUnit (.NET), and pytest (Python).
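As an illustration, here is a minimal sketch of unit tests in the pytest style. The function `apply_discount` is hypothetical, invented for this example. pytest collects files named like `test_*.py` and runs every function whose name starts with `test_`, reporting any `assert` that fails.

```python
def apply_discount(price: float, percent: float) -> float:
    """Hypothetical function under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


def test_typical_discount():
    # A normal case: 10% off 50.00 should be 45.00
    assert apply_discount(50.00, 10) == 45.00


def test_zero_discount_returns_original_price():
    # Edge case: no discount leaves the price unchanged
    assert apply_discount(19.99, 0) == 19.99


def test_invalid_percent_is_rejected():
    # An out-of-range percent should raise ValueError
    try:
        apply_discount(50.00, 150)
    except ValueError:
        return  # expected outcome
    raise AssertionError("expected ValueError for percent > 100")
```

Each test checks one behavior of one small function, which is what keeps unit tests fast and their failures easy to diagnose. In a real pytest suite the last test would more typically use `with pytest.raises(ValueError):`; the plain `try`/`except` keeps this sketch free of dependencies.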

Integration Tests

Integration tests are the next level of the testing pyramid and sit on top of unit tests. While unit tests focus on testing each piece in isolation, integration tests focus on making sure that the pieces work together correctly.

Integration tests are also typically automated, and there are a significant number of them as well, though typically fewer than unit tests. Because integration tests cover more pieces of code, they usually run slower than unit tests. They also usually require more setup in the test code and are more difficult to create and maintain. When working with hardware systems where unit tests are run in a simulator, the integration tests would typically be run on real hardware.

Integration tests are often run with the same tools as unit tests (such as JUnit or NUnit), but they may also use other tools such as Spring Test (Java) or Postman (for API testing).
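A minimal sketch of an integration test, assuming two hypothetical components: a `UserRepository` that stores users in a SQLite database and a `RegistrationService` that depends on it. Rather than testing each piece in isolation, the test wires the real components together against an in-memory database from Python's standard `sqlite3` module and checks that they cooperate correctly.

```python
import sqlite3


class UserRepository:
    """Hypothetical data-access component backed by SQLite."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS users (email TEXT PRIMARY KEY, name TEXT)"
        )

    def add(self, email: str, name: str) -> None:
        self.conn.execute("INSERT INTO users VALUES (?, ?)", (email, name))
        self.conn.commit()

    def find(self, email: str):
        row = self.conn.execute(
            "SELECT name FROM users WHERE email = ?", (email,)
        ).fetchone()
        return row[0] if row else None


class RegistrationService:
    """Hypothetical business-logic component that uses the repository."""

    def __init__(self, repo: UserRepository):
        self.repo = repo

    def register(self, email: str, name: str) -> bool:
        email = email.strip().lower()  # normalize before storing
        if self.repo.find(email) is not None:
            return False  # reject duplicate registrations
        self.repo.add(email, name)
        return True


def test_registration_persists_user_and_rejects_duplicates():
    # Real components, real (in-memory) database: no mocks
    conn = sqlite3.connect(":memory:")
    service = RegistrationService(UserRepository(conn))

    assert service.register("Ada@Example.com", "Ada") is True
    # The normalized email is what actually reached the database
    assert UserRepository(conn).find("ada@example.com") == "Ada"
    # A second registration with the same address is rejected
    assert service.register("ada@example.com", "Ada") is False
    conn.close()
```

An in-memory database keeps the test fast and isolated; a fuller integration suite might run against the same database engine used in production, at the cost of more setup.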

System or End-to-End Testing

System, or end-to-end, testing rests on top of integration testing in the testing pyramid. These tests are meant to simulate realistic user scenarios that test the behavior of the entire system from start to finish.

There are typically fewer system tests compared to integration tests or unit tests. These system tests run longer, and are much more complex, due to their larger scope. System tests are more expensive to write, run, and maintain.

Examples of tools for system tests include Selenium (web applications), Cypress (JavaScript-based testing of web applications), and Appium (mobile applications).

User Acceptance Testing (UAT)

While not always included in the testing pyramid, User Acceptance Testing (UAT) verifies the application requirements directly, and therefore can be thought of as another layer above system level testing.

In UAT, the key stakeholders verify that the system works as intended and meets the requirements. UAT validates the end-to-end business flow of the system to ensure it is ready for deployment. UAT is typically performed by end users or other stakeholders that have a deep understanding of the business requirements and can effectively judge if the software meets their needs.

UAT is more rigorous than having end users casually click around and play with the system. It is typically a scripted experience where specific use cases are documented and followed to demonstrate each requirement.

UAT should be performed in an environment as close to the production environment as possible to identify any issues that might occur during actual use.

Non-Functional Testing

While functional testing focuses on the desired features of a system, non-functional testing evaluates the non-functional aspects of the software, such as performance, usability, reliability, security, and scalability. It ensures that the software meets certain standards and performs well under various conditions.

Performance Testing

Performance testing evaluates the speed, responsiveness, and stability of the application at scale. Load testing assesses how the application behaves under expected load conditions, and stress testing evaluates the application's limits by subjecting it to extreme conditions. Other variations include the soak test, in which the software runs under load for an extended period of time, and the spike test, in which the application is given a sudden increase in load.

Performance testing is critical to understanding and mitigating risks of actual deployment, because it often uncovers issues that are not present in a development environment.
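Dedicated load-testing tools are normally used for this, but the core idea of a load test can be sketched with Python's standard library: issue many requests concurrently and examine latency percentiles rather than a single timing. In this sketch `handle_request` is a hypothetical stand-in for the operation under test (for example, an HTTP call), and the request count and concurrency are arbitrary values chosen for illustration.

```python
import statistics
import time
from concurrent.futures import ThreadPoolExecutor


def handle_request(i: int) -> int:
    """Hypothetical operation under test; replace with a real call."""
    time.sleep(0.01)  # simulate roughly 10 ms of work
    return i


def timed_call(i: int) -> float:
    # Measure the wall-clock latency of one request, in milliseconds
    start = time.perf_counter()
    handle_request(i)
    return (time.perf_counter() - start) * 1000


def run_load_test(total_requests: int, concurrency: int) -> dict:
    # A pool of worker threads simulates concurrent users
    with ThreadPoolExecutor(max_workers=concurrency) as pool:
        latencies = list(pool.map(timed_call, range(total_requests)))
    return {
        "requests": len(latencies),
        "median_ms": statistics.median(latencies),
        # quantiles(n=20) returns 19 cut points; the last is the 95th percentile
        "p95_ms": statistics.quantiles(latencies, n=20)[-1],
        "max_ms": max(latencies),
    }


if __name__ == "__main__":
    print(run_load_test(total_requests=200, concurrency=20))
```

Reporting percentiles matters because an average can hide a small number of very slow requests. A soak or spike test would reuse the same measurement, varying how long the load runs or how suddenly it increases.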

Usability Testing

Usability testing evaluates the user-friendliness of a system. It seeks to understand whether end users can effectively find and use the features they need. It focuses on the user experience (UX) and how easy and intuitive the software is to use.

The Nielsen Norman Group defines usability by five quality components:

- Learnability: How easy is it for users to accomplish basic tasks the first time they encounter the design?

- Efficiency: Once users have learned the design, how quickly can they perform tasks?

- Memorability: When users return to the design after a period of not using it, how easily can they reestablish proficiency?

- Errors: How many errors do users make, how severe are these errors, and how easily can they recover from the errors?

- Satisfaction: How pleasant is it to use the design?

In usability testing, real users are given the software or a prototype and asked to perform certain tasks. Evaluators then record their actions, often using screen capture or audio/video recordings.

Security Testing

Security testing is a process designed to uncover vulnerabilities in a software application to ensure that data and resources are protected from potential intruders. The primary goal is to identify security risks and verify that the system's defenses are sufficient to protect against attacks.

Security testing can include testing the software, hardware, and network infrastructure to validate the effectiveness of security controls. It gives particular emphasis to areas such as authentication, authorization, data protection, and security logging.
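Some security requirements, such as authorization, can be checked with ordinary automated tests. A minimal sketch, assuming a hypothetical `get_invoice` function, a hypothetical in-memory data store, and a simple rule that users may read only their own invoices unless they are administrators. The important test is the negative one: it asserts that access is denied.

```python
class AccessDenied(Exception):
    """Raised when a user requests data they are not authorized to see."""


# Hypothetical data store and role list, for illustration only
INVOICES = {
    101: {"owner": "alice", "amount": 250},
    102: {"owner": "bob", "amount": 90},
}
ADMINS = {"carol"}


def get_invoice(current_user: str, invoice_id: int) -> dict:
    """Return an invoice only if the caller owns it or is an administrator."""
    invoice = INVOICES[invoice_id]
    if invoice["owner"] != current_user and current_user not in ADMINS:
        raise AccessDenied(f"{current_user} may not read invoice {invoice_id}")
    return invoice


def test_owner_can_read_own_invoice():
    assert get_invoice("alice", 101)["amount"] == 250


def test_user_cannot_read_someone_elses_invoice():
    # Negative case: the request must be refused
    try:
        get_invoice("alice", 102)
    except AccessDenied:
        return
    raise AssertionError("alice should not be able to read bob's invoice")


def test_admin_can_read_any_invoice():
    assert get_invoice("carol", 102)["owner"] == "bob"
```

Tests that confirm something is *not* allowed are easy to forget, because the normal user flow works perfectly well without them.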

Security testing may include the following:

- Vulnerability Scanning: Automated tools are used to identify known vulnerabilities within the system. These can scan for outdated software, missing patches, and common security misconfigurations.

- Penetration Testing (Pen Testing): Real-world attacks are simulated to identify and exploit vulnerabilities. These are performed by security experts who try to gain unauthorized access to understand the impact of potential security breaches.

- Security Auditing: A comprehensive review is made of the system's security measures, comparing them to policies, standards, and regulations. This typically involves reviewing the code and configurations directly.

- Compliance: Many fields require additional audits to ensure compliance with accessibility regulations, financial system standards, or other requirements.

How can tests be designed or measured?

In each of the tests above, there are considerations for designing and evaluating the tests.

Code Coverage

When running automated tests, such as unit and integration tests, it is common to track the code coverage of these tests. Code coverage is the percentage of the code that is exercised by at least one test case. For example, if a particular block of code contains an if/else statement, there should be one test that exercises the if branch and another that exercises the else branch. While higher coverage generally indicates better-tested code, 100% coverage is often difficult to achieve and does not guarantee bug-free code, so aiming for 100% may not even be desirable.
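To make the if/else example concrete, the hypothetical `shipping_cost` function below has two branches, so full branch coverage requires at least two tests. With only the first test, the else branch would never run. In Python, a tool such as coverage.py can report this, for example with `coverage run --branch -m pytest` followed by `coverage report`.

```python
def shipping_cost(order_total: float) -> float:
    """Hypothetical rule: orders of 50.00 or more ship free; others pay 5.00."""
    if order_total >= 50.00:
        return 0.00
    else:
        return 5.00


def test_free_shipping_branch():
    # Exercises the if branch
    assert shipping_cost(75.00) == 0.00


def test_paid_shipping_branch():
    # Exercises the else branch
    assert shipping_cost(20.00) == 5.00
```

Together these two tests reach 100% branch coverage of the function, yet neither checks an order of exactly 50.00. If the condition were mistakenly written as `>`, both tests would still pass, which is exactly why full coverage does not guarantee correct code.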

In addition, if your organization sets a required coverage threshold, be aware that it is easy for developers to game it by writing many test cases for simple code, such as getters and setters, for the sole purpose of pushing coverage past the threshold.

Black-box versus White-box Testing

White-box and black-box testing are two fundamental approaches to software testing that differ in their focus, methodology, and the knowledge required by the testers.

White-box Testing

White-box testing, also known as clear-box or glass-box testing, is a testing approach that involves examining the internal structure, design, and implementation of the software. Testers have full visibility of the code, algorithms, and logic used in the application.

Testers must have a deep understanding of the internal workings of the application, including its source code, architecture, and logic. The tests themselves are based on the internal paths, branches, conditions, loops, and data flow of the software.
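Because white-box test cases come from reading the code, a tester who sees a loop will often apply a common heuristic: run it zero times, once, and many times. A sketch with a hypothetical `average` function; the zero-iteration case is chosen specifically because the tester can see the `count == 0` guard after the loop, which a black-box tester would not know exists.

```python
def average(values: list[float]) -> float:
    """Hypothetical function: mean of a list, defined as 0.0 for an empty list."""
    total = 0.0
    count = 0
    for v in values:  # the loop the white-box tests target
        total += v
        count += 1
    if count == 0:  # branch reached only when the loop never runs
        return 0.0
    return total / count


def test_loop_zero_iterations():
    assert average([]) == 0.0


def test_loop_one_iteration():
    assert average([4.0]) == 4.0


def test_loop_many_iterations():
    assert average([1.0, 2.0, 3.0, 6.0]) == 3.0
```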

Advantages:

- Identifies internal errors and logic flaws early in the development process.

- Improves code quality by systematically exercising the code's paths and branches.

- Helps optimize code by identifying redundant or inefficient sections.

Disadvantages:

- Requires testers with programming knowledge and understanding of the internal code.

- Can be time-consuming and complex, especially for large applications.

- May not effectively identify issues related to user experience or system integration.

Black-box Testing

Black-box testing, also known as behavioral or functional testing, is a testing approach that focuses on evaluating the functionality of the software without considering its internal implementation. Testers do not need to know the internal code or structure.

Testers only need to understand the inputs and expected outputs of the application. The tests themselves are based on the requirements, specifications, and user stories, and the focus is on the external behavior of a component or the application.

Advantages:

- Does not require knowledge of the internal code, making it accessible to testers without programming skills.

- Focuses on the end-user experience and functional requirements.

- Effective in identifying issues related to functionality, usability, and performance.

Disadvantages:

- May not uncover internal code issues or logic errors.

- Limited coverage of the internal code structure.

- Can miss edge cases that require knowledge of the internal implementation.

Boundary Value Analysis

Boundary value analysis is a testing technique that focuses on the values at the boundaries of input domains. The idea is that errors often occur at the edges of input ranges rather than in the middle.

As an example, consider an input field that accepts values between 1 and 100 (inclusive). The boundary values would be 1 and 100, which would yield the following test cases:

- 0 (just below the lower boundary)

- 1 (at the lower boundary)

- 2 (just above the lower boundary)

- 99 (just below the upper boundary)

- 100 (at the upper boundary)

- 101 (just above the upper boundary)
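The six test cases above map directly onto a table-driven test. A sketch assuming a hypothetical `is_valid_quantity` validator for the 1 to 100 field. In pytest, `@pytest.mark.parametrize` would report each pair as a separate test case; the plain loop here keeps the sketch dependency-free.

```python
def is_valid_quantity(value: int) -> bool:
    """Hypothetical validator for a field that accepts 1 to 100 inclusive."""
    return 1 <= value <= 100


# (input, expected result) pairs taken directly from boundary value analysis
BOUNDARY_CASES = [
    (0, False),    # just below the lower boundary
    (1, True),     # at the lower boundary
    (2, True),     # just above the lower boundary
    (99, True),    # just below the upper boundary
    (100, True),   # at the upper boundary
    (101, False),  # just above the upper boundary
]


def test_boundaries():
    for value, expected in BOUNDARY_CASES:
        assert is_valid_quantity(value) == expected, f"failed for {value}"
```

A typical off-by-one mistake, such as writing `1 < value` instead of `1 <= value`, would be caught by the case for 1, while a test using a middle value like 50 would miss it.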

Equivalence Partitions

A related idea to boundary value analysis is the concept of equivalence partitioning, which divides the input data into partitions of equivalent data from which test cases can be derived. It assumes that all inputs in a particular partition will be treated similarly by the software.

The main idea is that inputs in the same partition are expected to be processed in the same way, so you should test one or more representatives from each equivalence partition. This reduces the overall amount of testing, because you do not need to extensively test multiple values within the same partition.

For example, consider an input field that accepts values between 1 and 100 (inclusive). This can be divided into three equivalence classes as follows:

- 1-100 (valid input)

- Less than 1 (invalid input)

- Greater than 100 (invalid input)

Then, you can choose a representative sample from each of these partitions. The test cases from boundary value analysis already include values from each partition, but you may also choose a less extreme value, such as 50, for the valid partition.
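Equivalence partitioning can be expressed the same way, with one representative per partition instead of many values. The `is_valid_quantity` validator is again a hypothetical stand-in for the 1 to 100 field, and the representatives (-5, 50, 250) are arbitrary picks from each partition.

```python
def is_valid_quantity(value: int) -> bool:
    """Hypothetical validator for a field that accepts 1 to 100 inclusive."""
    return 1 <= value <= 100


# One representative value per equivalence partition
PARTITIONS = {
    "less than 1 (invalid)": (-5, False),
    "1-100 (valid)": (50, True),
    "greater than 100 (invalid)": (250, False),
}


def test_one_representative_per_partition():
    for name, (value, expected) in PARTITIONS.items():
        assert is_valid_quantity(value) == expected, f"partition {name} failed"
```

Three tests stand in for the whole input space here; combining them with the boundary cases gives good coverage with only a handful of inputs.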

What are some other processes for ensuring code quality?

In addition to the testing described above, there are additional processes that developers can use to ensure the quality of the code. These can include the following:

- Code Reviews: Peer reviews where other developers examine code changes before they are merged into the main codebase. Code reviews identify defects early, improve code readability, and facilitate knowledge sharing.

- Pair Programming: Two developers work together at a single workstation; one writes code while the other reviews each line as it is written. Programming in this way provides immediate code review, improves code quality, and enhances collaboration.

- Static Code Analysis: Tools analyze the code for potential errors and adherence to coding standards without executing the program. These tools help identify issues that might not be caught during manual reviews or testing.
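Real projects rely on established linters and analyzers, but a tiny sketch shows the key idea of static analysis: the code is parsed and inspected, never run. This example uses Python's built-in `ast` module to flag bare `except:` clauses, which silently catch every exception; the rule itself is just an illustrative choice.

```python
import ast


def find_bare_excepts(source: str) -> list[int]:
    """Return line numbers of bare `except:` clauses, without executing the code."""
    tree = ast.parse(source)
    return [
        node.lineno
        for node in ast.walk(tree)
        if isinstance(node, ast.ExceptHandler) and node.type is None
    ]


# Sample code to analyze; it is only parsed, never executed
SAMPLE = """
def load(path):
    try:
        return open(path).read()
    except:
        return None
"""

if __name__ == "__main__":
    for line in find_bare_excepts(SAMPLE):
        print(f"line {line}: bare 'except:' hides unexpected errors")
```

Because the sample is never run, the analyzer can report the problem even though the file named in `load` does not exist.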

Where should tests be run?

Tests should be run in environments that closely resemble the production environment to ensure accurate and reliable results. The choice of where to run tests depends on the type of test and its specific objectives.

The following are common environments for running tests:

- Local Development Environment: This is best suited for unit tests run by developers to get quick feedback as they are writing code. It is typically limited to unit tests and some integration tests.

- Continuous Integration Environment: Tests are run automatically as part of the build process. These include unit, integration, and regression tests to ensure that new changes do not break existing functionality.

- Staging, or Pre-production Environment: After the continuous integration process, code is often deployed to a staging environment that mirrors the production environment as closely as possible, including similar hardware and configurations, but without live user traffic. This staging environment gives an opportunity to perform end-to-end tests, performance tests, and user acceptance tests, in an environment that is highly representative of the final production environment.

- Production Environment: After the application has been deployed, a certain amount of verification should be done in the production environment itself. This also includes monitoring, logging, and A/B testing. These topics will be discussed more in the lesson on Release and Maintenance. Any testing in the production environment should be limited to non-intrusive tests to avoid disrupting users or negatively impacting live data.

Other strategies for testing new features in the production system include Canary Deployments, where a new version is first rolled out to a small subset of users so it can be monitored closely before it reaches everyone, and Blue/Green Deployments, where two identical production environments run side by side: one serves live traffic while the other hosts the new version, and traffic is switched over once the new version is verified (and can be switched back quickly if problems appear).
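One common way to choose the canary subset is to hash a stable user identifier into a bucket, so each user consistently sees the same version across requests. A minimal sketch, assuming hypothetical string user IDs and a rollout percentage; real deployments usually perform this routing in a load balancer, service mesh, or feature-flag service rather than in application code.

```python
import hashlib


def in_canary(user_id: str, rollout_percent: int) -> bool:
    """Deterministically decide whether a user is in the canary group."""
    # Hashing makes the assignment stable for a user and evenly spread across users
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # bucket in 0..99
    return bucket < rollout_percent


def choose_version(user_id: str, rollout_percent: int = 5) -> str:
    return "new" if in_canary(user_id, rollout_percent) else "stable"


if __name__ == "__main__":
    users = [f"user-{n}" for n in range(1000)]
    canary_count = sum(in_canary(u, 5) for u in users)
    print(f"{canary_count} of {len(users)} users routed to the canary")
```

A useful property of this design is that raising `rollout_percent` only adds users to the canary; anyone already in it stays in it, so the rollout can widen gradually as monitoring stays healthy.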

Simulation Test Environments

In addition to these environments, simulations can also play an important role in testing, especially when it is impractical to replicate certain conditions or behaviors in a test environment. However, they have their limitations and should be complemented by real environment testing.

Simulations can be especially useful for testing particular hardware configurations. They can also mock or simulate external components of a system that cannot easily be incorporated into a testing environment. A simulation could also be used to model user behavior in extreme or high-load situations, but because simulations may not exactly mimic the true behavior of a system, they should be used only as one part of an overall strategy.
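At the level of unit and integration tests, a lightweight form of simulation is a mock that stands in for an external system. A sketch using `Mock` from Python's standard `unittest.mock` module; the `checkout` function and the gateway's `charge` method are hypothetical. The mock makes it easy to simulate a declined payment, which might be awkward to trigger against a real payment service.

```python
from unittest.mock import Mock


def checkout(cart_total: float, gateway) -> str:
    """Hypothetical checkout logic that depends on an external payment gateway."""
    response = gateway.charge(amount=cart_total)
    if response["status"] == "approved":
        return "order confirmed"
    return "payment declined"


def test_checkout_with_simulated_decline():
    # The mock simulates the external gateway returning a decline
    gateway = Mock()
    gateway.charge.return_value = {"status": "declined"}

    assert checkout(42.50, gateway) == "payment declined"
    # Verify the code called the gateway exactly once with the right amount
    gateway.charge.assert_called_once_with(amount=42.50)


def test_checkout_with_simulated_approval():
    gateway = Mock()
    gateway.charge.return_value = {"status": "approved"}

    assert checkout(10.00, gateway) == "order confirmed"
```

A mock behaves only as it was programmed to, so it cannot reveal how the real gateway actually responds; that is why such tests still need to be complemented by testing in real or near-real environments.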

While simulations are a valuable tool in the testing process, they are not a substitute for testing in real or near-real environments. A balanced approach, using a combination of local, continuous integration, staging, and production environments, along with simulations, provides effective coverage and ensures software reliability. This layered approach helps identify issues early, ensures code quality, and validates the software's performance and behavior under real-world conditions.

How can you account for each of the requirements?

Test cases and results should be able to trace back to their origins, such as requirements, design documents, and source code. This ensures that all aspects of the software are tested, all requirements are covered, and any changes can be tracked throughout the development lifecycle.

Traceability Matrix

A traceability matrix is a document, often a table or spreadsheet, that maps user requirements to test cases. It ensures that all requirements have corresponding test cases, identifies missing or redundant tests, and tracks the status of each requirement throughout testing.

Example:

| Requirement ID | Requirement Description | Test Case ID | Test Case Description | Status |
|---|---|---|---|---|
| R1 | Login functionality | TC1 | Verify login with valid credentials | Passed |
| R2 | Password reset | TC2 | Verify password reset process | Failed |

Types of Traceability

Traceability can run forward, backward, or in both directions:

- Forward Traceability: Tracing requirements to test cases. Ensures each requirement is covered by tests.

- Backward Traceability: Tracing test cases back to requirements. Ensures each test case has a clear purpose and corresponds to a requirement.

- Bidirectional Traceability: Combines both forward and backward traceability to ensure comprehensive coverage and consistency.
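Forward and backward traceability checks are straightforward to automate once the matrix is kept in a structured form. A sketch, assuming the matrix is available as plain Python data: R1, R2, TC1, and TC2 follow the example table above, while R4 and TC9 are hypothetical entries added to show what each check catches.

```python
# Hypothetical requirements and test-to-requirement mapping
REQUIREMENTS = {
    "R1": "Login functionality",
    "R2": "Password reset",
    "R4": "Account deletion",  # hypothetical requirement with no test yet
}

TEST_CASES = {
    "TC1": {"description": "Verify login with valid credentials", "covers": ["R1"]},
    "TC2": {"description": "Verify password reset process", "covers": ["R2"]},
    "TC9": {"description": "Verify legacy export", "covers": []},  # hypothetical orphan
}


def untested_requirements(requirements: dict, tests: dict) -> list[str]:
    """Forward traceability: requirements that no test case covers."""
    covered = {req for tc in tests.values() for req in tc["covers"]}
    return sorted(r for r in requirements if r not in covered)


def orphan_tests(requirements: dict, tests: dict) -> list[str]:
    """Backward traceability: tests that trace to no known requirement."""
    return sorted(
        tc_id
        for tc_id, tc in tests.items()
        if not any(req in requirements for req in tc["covers"])
    )


if __name__ == "__main__":
    print("Requirements without tests:", untested_requirements(REQUIREMENTS, TEST_CASES))
    print("Tests without requirements:", orphan_tests(REQUIREMENTS, TEST_CASES))
```

Running both checks together is a simple form of bidirectional traceability: R4 is flagged as untested and TC9 as a test without a clear purpose.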

Benefits of Traceability

There are a number of benefits to traceability, including the following:

- Requirement Coverage: Ensures all requirements are tested and met.

- Impact Analysis: Identifies the impact of changes in requirements on test cases and vice versa.

- Defect Tracking: Helps trace defects back to their originating requirements, making it easier to understand and fix issues.

- Accountability: Provides a clear audit trail of testing activities and decisions, improving transparency and accountability.

- Regulatory Compliance: Essential for industries with stringent regulatory requirements, ensuring that all necessary testing is documented and verifiable.

Implementing Traceability

Traceability should be implemented throughout the SDLC, from requirements gathering through design, coding, testing, and maintenance. Various tools can help manage traceability, such as issue trackers (for example, Jira), test management tools (for example, TestRail), and requirements management tools.

Example Scenario

Imagine you are working on an e-commerce application with a requirement to implement a secure checkout process. The following shows the requirement, its design, its test cases, and how they map to each other.

- Requirement: Implement a secure checkout process (Requirement ID: R3).

- Design: Create a design document outlining the checkout process flow and security measures.

- Test Cases: Develop test cases to verify each aspect of the checkout process.

  - TC3.1: Verify that the user can add items to the cart and proceed to checkout.

  - TC3.2: Verify that the user can enter shipping and payment information securely.

  - TC3.3: Verify that the user receives an order confirmation after successful payment.

- Traceability Matrix: Link these test cases back to the requirement (R3) to ensure complete coverage.

| Requirement ID | Requirement Description | Test Case ID | Test Case Description | Status |
|---|---|---|---|---|
| R3 | Implement secure checkout | TC3.1 | Verify adding items to the cart and proceeding to checkout | Passed |
| R3 | Implement secure checkout | TC3.2 | Verify secure entry of shipping and payment information | Passed |
| R3 | Implement secure checkout | TC3.3 | Verify receipt of order confirmation post-payment | Passed |

What is the mindset of a tester?

Software teams can be organized in different ways, with some having unique roles for testers and others expecting developers to also perform the testing role. In either case, it is important to recognize that testing often requires a very different mindset than developing software. While developers focus on the problem-solving that produces solutions to the customer's needs, testers apply a different kind of problem-solving: analyzing where and how the software might fail.

The following are some key components of the mindset of a tester.

- Focus on Quality Assurance: Testers ensure the software meets quality standards and requirements. They look for defects, inconsistencies, and deviations from expected behavior.

- Attention to Detail: Testers scrutinize the software closely to find edge cases and subtle issues. They pay attention to small details that might affect the overall functionality and user experience.

- User Perspective: Testers think from the perspective of the end-user to understand potential usability issues. They have empathy for the user and consider how users will interact with the software and identify possible points of frustration or confusion.

- Skeptical and Critical Thinking: Testers operate under the assumption that there are bugs to be found. They challenge assumptions made during the development process and explore scenarios that might not have been considered.

- Analytical Approach: Testers use structured methodologies to test the software comprehensively. They investigate the root causes of defects to prevent recurrence.

- Collaboration and Communication: Testers provide detailed feedback to developers on defects and areas for improvement. They maintain clear and thorough documentation of test cases, results, and issues found.

- Adaptability: Testers must adapt to new testing tools, techniques, and methodologies to improve testing processes. They learn from past experiences and failures to strengthen future testing efforts.

When might you choose not to fix a bug?

It might sound odd, but choosing not to fix a bug can be a strategic decision based on various factors. Here are some scenarios where a team might decide not to fix a bug:

- Low Impact

  - Minor Issue: The bug has minimal impact on the overall user experience or functionality of the application.

  - Workaround Available: There is an easy and effective workaround that users can apply to avoid the issue.

- Low Priority

  - Rarely Encountered: The bug occurs very infrequently and does not affect a significant number of users.

  - Non-Critical Area: The bug is in a non-critical part of the application that is not frequently used.

- High Cost of Fixing

  - Complex Fix: The effort required to fix the bug is disproportionately high compared to its impact.

  - Risk of Regression: Fixing the bug might introduce new issues or destabilize other parts of the application.

- Resource Constraints

  - Time Constraints: There is a tight deadline, and the team needs to prioritize more critical tasks.

  - Budget Constraints: Limited budget resources make it necessary to focus on higher-priority issues.

- Upcoming Changes

  - Planned Redesign: The affected component is scheduled for redesign or replacement, and the bug will be addressed in the process.

  - Deprecation: The feature or module containing the bug is planned to be deprecated soon.

- User Acceptance

  - No User Complaints: Users have not reported the bug, indicating it may not be significantly impacting them.

  - Acceptable Behavior: The current behavior, though a bug, might be acceptable or even preferred by some users.

- Strategic Decisions

  - Business Priorities: The focus may be on new features, enhancements, or strategic goals that take precedence over fixing the bug.

  - Competitive Advantage: Resources might be better allocated to features that provide a competitive advantage rather than minor bug fixes.

- Non-Reproducibility

  - Cannot Reproduce: The bug cannot be consistently reproduced, making it difficult to identify and fix.

Who is responsible for ensuring that the software works correctly?

While it might be easy to place responsibility for the software working correctly on end users through User Acceptance Testing, or on testers or quality assurance engineers, the truth is that every person throughout the SDLC process bears responsibility.

In this regard, testing should be considered in every aspect of the SDLC, not just the testing phase. The idea of considering testing in each phase has been recognized for a long time, but it has recently risen to prominence through a movement called Shift Left. The main idea of Shift Left is that as you define requirements, you think about how to test them. As you create the design, you think about how to test it. As you write code, you should be testing and thinking about how to verify the code that you are writing.

If you are the developer on a project, you should have the attitude that you are principally responsible for ensuring that your code is correct. You cannot simply pass this responsibility to a tester or anyone else.

Conclusion

As you can tell from the number of topics covered in this lesson, testing is a broad area that cannot be covered in a single week. Whole courses, programs of study, and career paths are devoted to better understanding how to test software.

Continue the conversation

After completing this reading, ask 3-5 follow-up questions about software testing to an AI system of your choice. (You may use ChatGPT, Bing, Claude AI, Gemini, or any other system of your choosing.)

Good questions may include:

- Explain ______ (for example, white-box testing, performance testing, soak testing)

- What kinds of bugs can be uncovered by ________ testing?

- What is the difference between ________ and _______ ?

- What kinds of bugs might still exist, even after ________ ?

- Why is software testing difficult?

Submission

After you are comfortable with these topics, return to Canvas to take the associated quiz.


ChatGPT assisted in the creation of this learning material.