6.6 Using AI to Create Automated Tests

AI in Testing

Using AI tools like ChatGPT to generate automated tests from code is a practical application of natural language processing (NLP) and machine learning. ChatGPT and other Large Language Model (LLM) tools can help testers with a wide variety of software testing tasks. Here's an overview of how they might help:

Understanding The Test Requirements

Developers or QA engineers can describe to ChatGPT, in natural language, the functionality they want to test. ChatGPT can then help refine those descriptions into detailed specifications for test scenarios and cases.

Test Scenario Generation

Developers can use ChatGPT to convert natural language descriptions of test scenarios into code snippets. This might involve translating high-level requirements into specific testing functions or assertions.

Test Case Generation

ChatGPT can be used to generate parameterized test cases based on different input combinations and expected outcomes. For instance, it could assist in creating data-driven tests with various input values.
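As a rough illustration of what data-driven output might look like, the sketch below runs one check over a table of inputs and expected results. It uses Python's built-in unittest module and borrows the get_cube_volume function from the example later in this section. The test class name and the specific input values are assumptions chosen for illustration, not output from ChatGPT.

```python
import unittest


def get_cube_volume(side):
    """Function under test, copied from the example later in this section."""
    return side ** 3


class CubeVolumeTests(unittest.TestCase):  # hypothetical test class name
    # Each row is (input side, expected volume); the values are illustrative.
    CASES = [
        (0, 0),
        (1, 1),
        (2, 8),
        (10, 1000),
    ]

    def test_known_volumes(self):
        for side, expected in self.CASES:
            # subTest reports each failing row separately instead of
            # stopping at the first mismatch.
            with self.subTest(side=side):
                self.assertEqual(get_cube_volume(side), expected)
```

Saved to a file, this can be run with `python -m unittest`. Adding a new case means adding one row to the table rather than writing another test method.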

Code Skeletons and Templates

ChatGPT can help generate initial code skeletons or templates for testing modules, classes, or functions. Developers can then fill in the details based on specific use cases. This is particularly useful for testers who don't have a strong background in coding, but have a strong set of test cases to evaluate.
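A skeleton of this kind might look something like the sketch below, written here with Python's built-in unittest module. The class name, method names, and TODO notes are all hypothetical placeholders. Each test is skipped until someone fills it in, so the test run lists unfinished work instead of reporting a false pass.

```python
import unittest


class TestVolumeModule(unittest.TestCase):  # hypothetical class name
    """Skeleton only: each method names a case and is skipped until filled in."""

    def test_typical_input(self):
        self.skipTest("TODO: call the function with an ordinary value and assert the result")

    def test_zero_input(self):
        self.skipTest("TODO: decide what a zero dimension should return")

    def test_invalid_input(self):
        self.skipTest("TODO: decide how negative or non-numeric input should be handled")
```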

Automation Framework Integration

The generated code can be integrated into popular automation testing frameworks such as Selenium (for web applications), JUnit or TestNG (for Java-based applications), or others like Pytest. Developers can customize the generated code to fit the chosen testing framework.

Iteration and Refinement

Developers and ChatGPT can engage in an interactive process, refining and iterating on the generated test code. This may involve clarifying ambiguous requirements or making adjustments based on developer feedback.

A Specific Example

Test Case Generation

To demonstrate some of the process described above, let's consider a specific example. Suppose you are working on a module that calculates the volume of certain types of solids, and you have written the following code:

    import math

    def get_cylinder_volume(radius, height):
        return math.pi * height * radius ** 2

    def get_sphere_volume(radius):
        return 4 / 3 * math.pi * radius ** 3

    def get_cube_volume(side):
        return side ** 3

    def get_tank_volume(length, width, height):
        return length * width * height

You know it's good programming practice to write unit tests for your code, but you didn't use test-driven development. You would like to generate some useful test cases to make sure your code works before you check it in.

Using ChatGPT, you can get help. Here is a prompt you could use describing your function in plain English:

I have created a python file with four functions that compute the volume of a cylinder, sphere, cube and tank. What test cases should I generate for these functions?

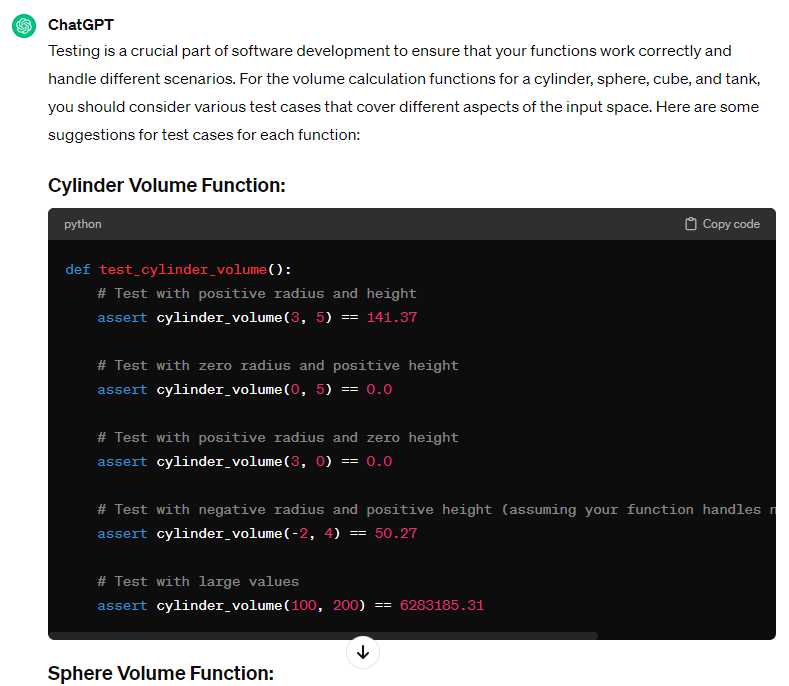

ChatGPT dutifully responds by giving you a few test cases you can use.

At the end ChatGPT gives us a valuable warning.

Make sure to adapt these test cases based on the specifics of your implementation and requirements. Additionally, consider edge cases and boundary values to ensure robustness and correctness in various scenarios.
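To make the advice about edge cases concrete, here is a small sketch of boundary tests for two of the functions above. The test names and chosen inputs are assumptions for illustration. The second test only documents what the code currently does; it does not claim that this is the desired behavior.

```python
import math


def get_sphere_volume(radius):
    return 4 / 3 * math.pi * radius ** 3


def get_tank_volume(length, width, height):
    return length * width * height


def test_zero_dimension_gives_zero_volume():
    # Boundary value: any zero dimension should produce zero volume.
    assert get_sphere_volume(0) == 0
    assert get_tank_volume(0, 2, 3) == 0


def test_negative_radius_is_not_rejected():
    # The functions as written do no validation, so a negative radius
    # silently yields a negative volume. Whether that should raise an
    # error instead is a requirements question the tester must settle.
    assert get_sphere_volume(-1) < 0
```

Tests like the second one are often where a generated suite is weakest, because the tool cannot know what your requirements say about invalid input.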

Indeed, you will notice that ChatGPT guessed our function name was “cylinder_volume” when it's actually “get_cylinder_volume”. We will have to make adjustments to this code in order to use it to test.

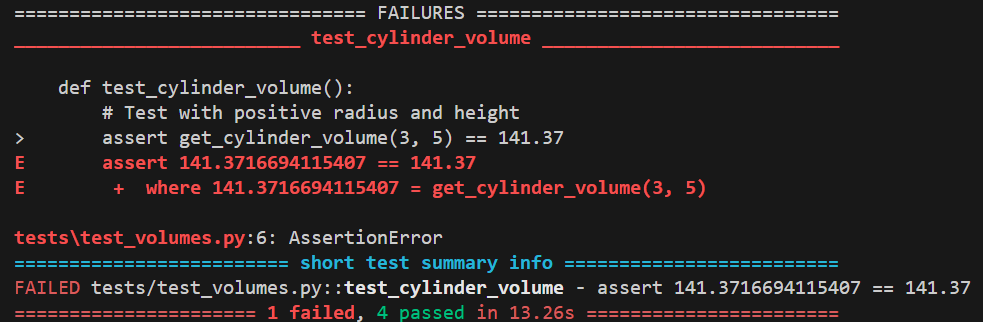

Once you copy this code in and adjust it to call the correct function names, you will notice that the tests don't pass because the expected values are not written to the same precision as the values the functions return. This is a common occurrence because ChatGPT may be missing the information it needs to generate good tests for our circumstances.

To correct this situation we will likely have to modify the tests ChatGPT gave us, or we need to give ChatGPT more specific information about tolerances.
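The sketch below shows why tolerance matters, assuming the failures come from comparing floating-point results for exact equality. The expected value 113.097 is a hypothetical rounded figure chosen for illustration; it is not taken from the ChatGPT response.

```python
import math


def get_sphere_volume(radius):
    return 4 / 3 * math.pi * radius ** 3


volume = get_sphere_volume(3)  # 36 * pi, roughly 113.0973355

# Hypothetical expected value, rounded to three decimal places.
expected = 113.097

# False: the computed value has digits beyond the third decimal place.
exact_match = (volume == expected)

# True: the two values differ by about 0.0003, inside the 0.001 tolerance.
close_match = math.isclose(volume, expected, abs_tol=1e-3)

print(exact_match, close_match)
```

Either the expected value needs more digits, or the comparison needs an explicit tolerance. The second option is usually easier to maintain.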

Using Code Samples

Another possibility is to copy the code directly into ChatGPT and ask it to generate sample tests for us. When you do this, ChatGPT has more context to work with. In this example, the code is easily identified as code that calculates the volume of different shapes. More complex functions may not be as easily identified. Here's an example prompt:

Evaluate the code I wrote below and generate test cases for them:

import math

def get_cylinder_volume(radius, height):

return math.pi * height * radius ** 2

def get_sphere_volume(radius):

return 4 / 3 * math.pi * radius ** 3

def get_cube_volume(side):

return side ** 3

def get_tank_volume(length, width, height):

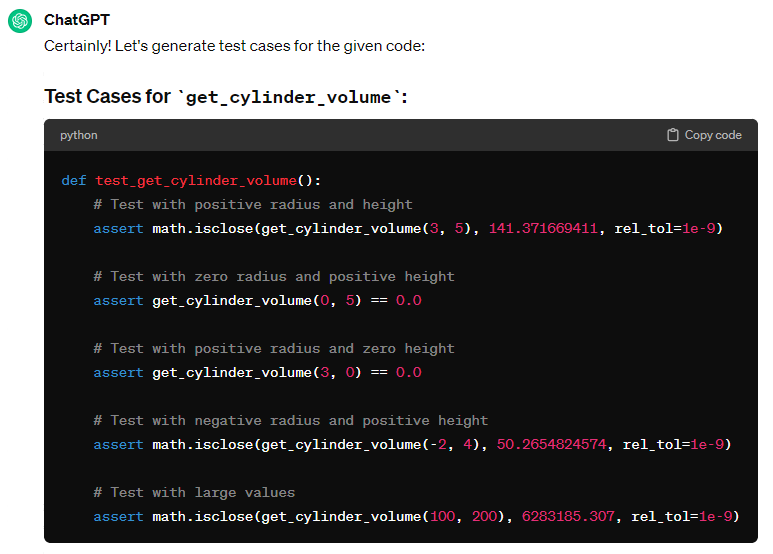

return length * width * heightOnce again, ChatGPT gives us some test cases we can use, but the results are somewhat different.

This time, ChatGPT gives us code that uses the math.isclose() function to compare floating-point values within a tolerance instead of testing for exact equality. This corrects the problem from earlier.
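Tests written in this style might look like the following sketch. It is not the actual ChatGPT output, which appears only in the screenshot; the test names, inputs, and the rel_tol value are assumptions for illustration.

```python
import math


def get_cylinder_volume(radius, height):
    return math.pi * height * radius ** 2


def get_sphere_volume(radius):
    return 4 / 3 * math.pi * radius ** 3


def get_cube_volume(side):
    return side ** 3


def get_tank_volume(length, width, height):
    return length * width * height


def test_cylinder_volume():
    # radius 2, height 5 gives 20 * pi
    assert math.isclose(get_cylinder_volume(2, 5), 20 * math.pi, rel_tol=1e-9)


def test_sphere_volume():
    # radius 3 gives 36 * pi
    assert math.isclose(get_sphere_volume(3), 36 * math.pi, rel_tol=1e-9)


def test_cube_volume():
    # Integer input produces an exact integer, so == is safe here.
    assert get_cube_volume(3) == 27


def test_tank_volume():
    assert math.isclose(get_tank_volume(1.5, 2.0, 4.0), 12.0, rel_tol=1e-9)
```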

Iterating and Refining

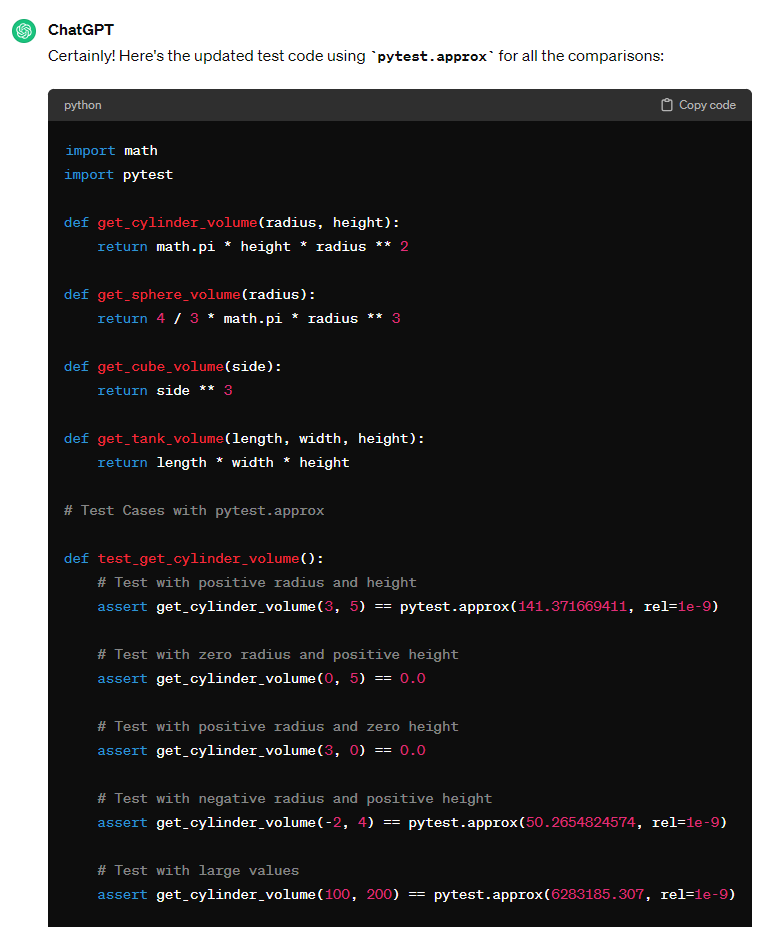

Suppose our company uses Pytest as its testing framework. We didn't tell ChatGPT this, so it didn't have that context. Pytest includes the approx() function, which works like math.isclose() to compare floating-point numbers within a tolerance. We can ask ChatGPT to update the generated tests with another prompt:

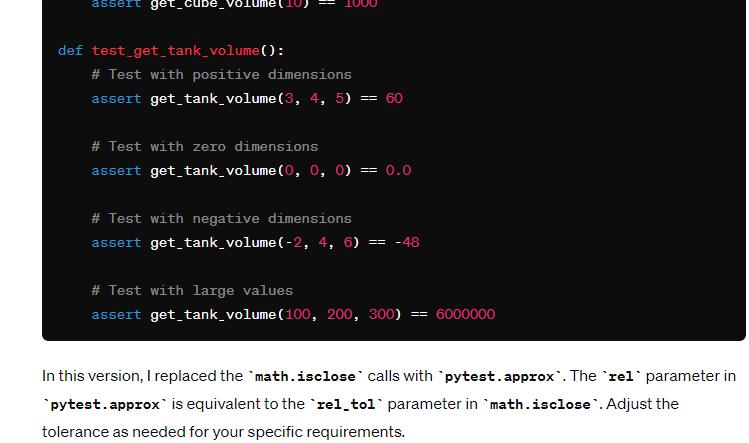

Update the test code so that it uses pytest.approx instead of math.isclose

ChatGPT will give you an updated version of all your tests and explain to you what it did.
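For comparison, a pytest.approx version of two of the tests might look like the sketch below. The test names and input values are assumptions for illustration, not the response ChatGPT actually produced, and the example assumes pytest is installed.

```python
import math

import pytest


def get_cylinder_volume(radius, height):
    return math.pi * height * radius ** 2


def get_sphere_volume(radius):
    return 4 / 3 * math.pi * radius ** 3


def test_cylinder_volume():
    # With no arguments, approx uses a relative tolerance of 1e-6.
    assert get_cylinder_volume(2, 5) == pytest.approx(20 * math.pi)


def test_sphere_volume():
    # rel loosens the relative tolerance so a rounded expected value still passes.
    assert get_sphere_volume(3) == pytest.approx(113.097, rel=1e-5)
```

One practical advantage of approx is that a failing assertion reports the expected value together with its tolerance, which makes a precision mismatch easier to diagnose.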

Tools like ChatGPT can give us a great head start on our testing, but we often need to evaluate and refine the results, either by giving the AI more detail about what we want, or accepting the output as given and making modifications to complete the job. Using tools like ChatGPT does not remove the responsibility for creating good test cases from the tester. We must evaluate the tests that were generated and determine if the coverage is good enough for our needs.
